Fault Tolerance

After decades of research, a reliable earthquake prediction system remains as elusive as ever. Michael Blanpied isn’t worried.

Michael Blanpied ’85 ScM, ’89 PhD, was just wrapping up for the day in his basement laboratory in Menlo Park, California, when he felt the tremors. As a first-year research scientist at the U.S. Geological Survey (USGS), Blanpied spent his days studying how earthquakes begin, a research topic that had occupied him for the past decade as a geophysics student at Yale and Brown. Blanpied could mathematically describe how the tiniest fault slip could grow into a devastating earthquake, but until that Tuesday afternoon in early October 1989 he had never personally experienced the sensation of the ground trembling beneath his feet. Blanpied gripped a filing cabinet as the shaking swelled to a crescendo, and a phenomenon he knew only in theory overwhelmed him with the raw power of its reality.

And just as suddenly as it had started, Blanpied’s first brush with an earthquake was over. The entire ordeal had lasted just 15 seconds. Blanpied never even had the time to get scared. “It was alarming, but I didn’t feel physically in danger,” he recalls. Most of his colleagues at USGS had already left the lab early to watch the third game of the World Series between the Oakland Athletics and San Francisco Giants, and as the lights in the basement flickered off, Blanpied’s immediate concern was whether the shaking and power loss had destroyed the precious experimental data on his computer. It was only when he ventured above ground that he realized he had experienced a historic moment.

“This was my first real earthquake and I didn’t know from personal experience just how big or close it was,” Blanpied says. “We didn’t have instant information or cell phones at the time, but once I was above ground and started running into people I realized that this was big.”

Blanpied had just lived through the Loma Prieta earthquake, one of the most powerful temblors ever recorded in California and among the most destructive ever to occur in the United States. The magnitude 6.9 earthquake began in the Santa Cruz Mountains just south of San Francisco and sent shockwaves rippling through the Bay Area, where they destroyed bridges, buildings, and freeways. All told, the earthquake caused around $10 billion in damage, nearly 4,000 injuries, and 63 deaths.

Loma Prieta was a disaster, but for the geosciences community it was also an opportunity. Investments in earthquake research and response capabilities tend to spike in the aftermath of a massive quake, creating a boom-bust cycle marked by disasters and renaissances in seismology. The infamous 1906 quake in San Francisco—which remains the most destructive in America’s history—resulted in research that revolutionized our understanding of how faults generate earthquakes. The devastating Sylmar earthquake in 1971 near Los Angeles transformed building codes in the state and led to greater public safety measures for earthquakes. Loma Prieta, in this respect, was no different.

In the aftermath of the quake, the USGS received a significant boost in funding from Congress with a mandate to closely integrate a number of regional earthquake monitoring and research efforts to create a truly national earthquake hazard program. Loma Prieta also brought renewed public interest in earthquake prediction, which has always been an elusive north star for the field. Flush with Congressional funding and emboldened by a suite of sophisticated new technologies such as GPS satellites and real-time seismic networks, USGS scientists rekindled hope that one day soon predicting earthquakes would be as routine as predicting the weather.

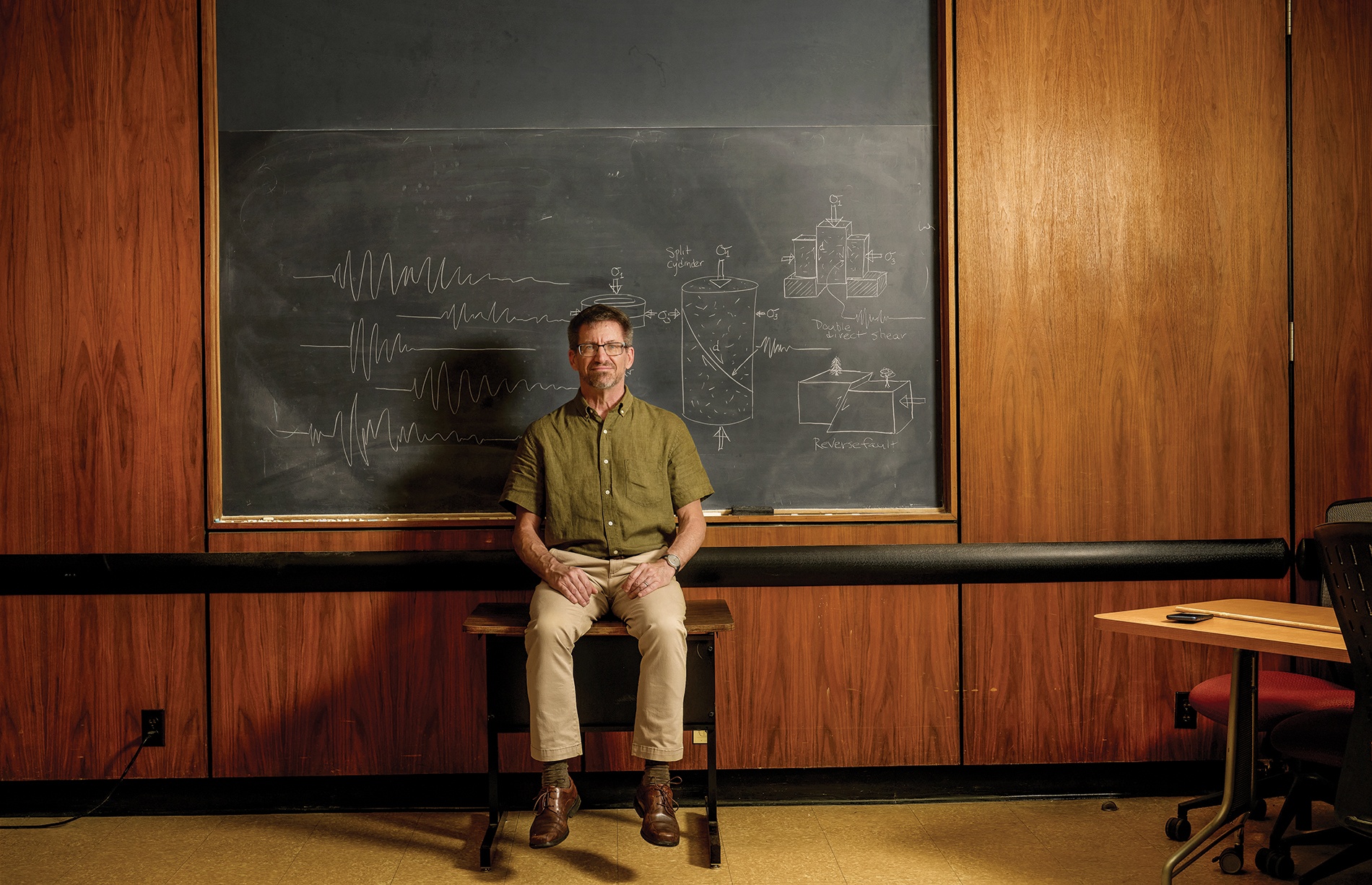

Earthquake prediction—or, as Blanpied calls it, the “P-word”—was a touchy subject that pitted the old hands against the young turks at the USGS. One side had had their optimism crushed by decades of lackluster progress and believed earthquake prediction was a fool’s errand. The other believed that new technologies had brought us to the cusp of predicting one of the most mysterious forces in nature. It’s a debate that continues to this day, and Blanpied—first as a young scientist and now as the associate coordinator of the USGS Earthquake Hazards Program—has had a front-row seat for it all.

Strange Vibrations

The ground beneath our feet is in constant motion. Californians experience this often, and Northeasterners were reminded in April when a magnitude 4.8 quake hit New Jersey. As you read this, there are massive blocks of rock in Earth’s crust slipping and sliding past one another along fractures known as faults. These rocks in the crust move at a glacial pace measured in centimeters per year, carried like ships atop the hot, slowly flowing rock of Earth’s mantle. This subterranean ballet is totally inaccessible to direct observation, a fundamental challenge that links seismology with astronomy and other scientific disciplines that are wholly reliant on remote sensing. It is only when the rocks slip against each other in just the right way to produce an earthquake that geoscientists can infer what is happening deep below the surface. But not every slip along a fault results in an earthquake and not every earthquake has the power to level entire towns. The vexing question for geophysicists—and the key to earthquake prediction—is why. Blanpied has spent his career searching for an answer.

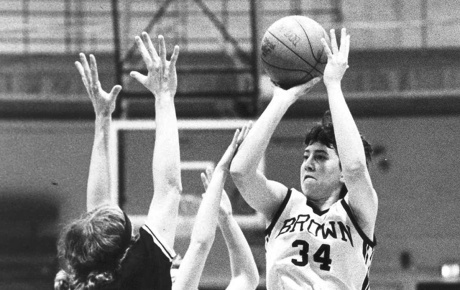

After getting an undergraduate degree in geophysics and geology from Yale, Blanpied came to Brown University in 1983 to study under Terry Tullis, now a professor emeritus of geological sciences and a pioneer of experimental rock mechanics. When most people think of geologists, they envision someone out in nature collecting samples and studying the inscrutable language of rocks carved in the landscape. That’s what Blanpied had in mind, too, when he first arrived at Brown and signed on to Tullis’s USGS research project studying small faults in the hills of Pennsylvania.

Although Blanpied soon discovered that he didn’t have much of a knack for field work, he proved to be an adept experimentalist and quickly made a home for himself in Tullis’s laboratory. For the next six years, Blanpied spent countless days grinding small slabs of granite against each other in a special experimental device custom-built by Tullis. His primary objective was to understand how faults grow and evolve over time. He was, in effect, using a machine small enough to fit inside a lab to replicate the same processes that kickstart a real earthquake along fault lines that can stretch for hundreds of miles.

“All earthquakes start small with a microscopic transition along a fault,” Blanpied says. “They’re tricky to study because we can’t see them, but we can reproduce the temperatures and pressures those rocks experience at depth in the lab in a very controlled way.”

In the mid-1980s, when Blanpied was conducting his experiments at Brown, earthquake prediction was still a distant dream. Many of his peers believed that it was, in principle, possible to say with some precision when and where an earthquake would strike. But the scientific knowledge—to say nothing of the technology—just wasn’t there yet. As an example, Blanpied described how in the mid-20th century the best models for earthquakes essentially reduced the instigating rocks to uniform flat rectangles so they could mathematically describe the stresses that built up as those rocks slid against each other. As more data was collected, it became distressingly clear that these simplified models hardly captured the immense complexity of earthquake dynamics.

“We realize now that the details of the earthquake source and what’s going on in the faults is complex over a variety of scales,” Blanpied says. “We can’t possibly observe it at all scales for every fault on a planetary scale, so we’re left with this conundrum of trying to understand the behavior of earthquakes when we can’t actually do the fine-scale measurements we’d need to really predict them.”

Still, it seemed plausible that the data that Blanpied and other experimental geophysicists were generating in the lab could be the seeds that would one day grow into an earthquake prediction model. But lab data on earthquake initiation was only one half of the solution. If earthquake prediction was ever going to become a reality, it was clear that it would require a lot more real-world data—and the Loma Prieta earthquake provided the perfect pretext to go collect it.

Who Killed Earthquake Prediction?

Parkfield, California, is a small ranching town midway between San Francisco and Los Angeles where cattle outnumber residents by a significant margin. This isn’t particularly unusual in that part of Monterey County, but what makes Parkfield unique is that it is one of the most earthquake-prone locations in the United States. Directly on top of the San Andreas fault, Parkfield gets a magnitude six or greater earthquake roughly every 22 years, earning it the nickname of the “Earthquake Capital of the World.”

In the early 1980s, after studying Parkfield’s oddly regular seismic activity for years, scientists at the USGS made an official prediction that Parkfield would experience at least a magnitude six earthquake in 1988, give or take five years. The USGS scientists installed an array of seismic monitoring equipment around Parkfield and waited. By the time 1993 rolled around, Parkfield had yet to experience the predicted quake. In fact, the town wouldn’t be rocked by another large earthquake until 2004, more than a decade after the end of the USGS prediction window. “That was really the final nail in the coffin in using patterns of earthquake timing and other easy to measure observables for predicting earthquakes,” Blanpied says. “After that, you wouldn’t say the word ‘prediction’ because you’d be labeled a crackpot.”

By the time Parkfield was finally hit with its next big quake, Blanpied had transitioned from working as a USGS scientist to his current position as the associate program coordinator for the USGS Earthquake Hazards Program. Originally established in the late 1970s as part of a pan-agency federal initiative called the National Earthquake Hazards Reduction Program, the USGS Earthquake Hazards Program sits at the intersection of research and science-driven policy—what Blanpied calls “applied Earth science.” In partnership with collaborators at the National Science Foundation, National Institute of Standards and Technology, Federal Emergency Management Agency, and other federal organizations, USGS scientists provide guidance on earthquake occurrences and their effects using data sourced from a global monitoring system.

As the incoming associate coordinator for the USGS Earthquake Hazards Program, Blanpied found himself in the middle of a fierce debate over the future of temblor prediction. The ability to predict an earthquake would obviously be a major advantage in advancing the program’s mission of reducing risks to life and property from quakes. The problem, however, was that no one seemed to agree on whether this goal was practically achievable. The failed Parkfield experiment notwithstanding, there was still a strong contingent of earthquake researchers who believed that prediction was possible by creating sophisticated computer models using a combination of experimental and real-world data. With satellites, seismometer networks on the ocean floor, and computers capable of analyzing these vast troves of data using increasingly sophisticated machine learning techniques, perhaps earthquake prediction wasn’t such a crazy idea after all?

Blanpied isn’t so sure. After two decades helping run the Earthquake Hazards Program, there still hasn’t been any meaningful progress toward a true earthquake prediction system—and it’s unclear if there ever will be. “Some of the smartest earth scientists on the planet have been working on this for decades and they’ve tried innumerable different approaches, but nothing’s worked,” Blanpied says. “Maybe the Earth is putting out an observable signal that would let us make predictions if we knew what to look for, but so far we haven’t seen it.”

But that doesn’t mean all hope is lost. For Blanpied, it’s about shifting emphasis away from prediction to forecasting. It’s a subtle rhetorical difference that has a major impact in terms of how we think about mitigating earthquake risks. Whereas prediction is about trying to name the time, place, and magnitude of a particular temblor in advance, forecasting is about looking at broader patterns of earthquake activity. Rather than trying to predict whether a quake is going to hit Parkfield next year, Blanpied and his colleagues want to use the wealth of new data and computational resources to create a system that will furnish estimates of the likelihood of a quake occurring in a particular area over a time period of decades to years, and eventually over smaller, more precise time periods.

While the lack of specificity might be disappointing to anyone hoping for an earthquake prediction system, Blanpied highlights just how useful a reliable earthquake forecasting system can be in terms of saving lives. With forecasting, the goal is more about improving preparedness for future earthquakes by providing people with the likelihood that a large quake will happen in their region over some time frame. This information can be used by citizens and policymakers to prepare for that eventuality, whether it’s by structurally improving existing infrastructure, strengthening building codes, or bolstering emergency response capabilities.
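To make the idea of a forecast concrete, here is a minimal sketch of how a likelihood "over some time frame" can be computed. It assumes the simplest textbook model, in which large quakes arrive at random at a constant average rate (a Poisson process). That model is an illustrative assumption and not a description of the USGS's actual methods. The function name and the 22-year input, borrowed from Parkfield's historical average, are likewise chosen only for illustration.

```python
import math


def quake_probability(mean_recurrence_years: float, window_years: float) -> float:
    """Chance of at least one large quake within the window.

    Assumes quakes occur independently at a constant average rate
    (a Poisson process), which is a simplification made for illustration.
    """
    if mean_recurrence_years <= 0:
        raise ValueError("mean_recurrence_years must be positive")
    if window_years < 0:
        raise ValueError("window_years must not be negative")
    rate_per_year = 1.0 / mean_recurrence_years
    # P(at least one event) = 1 - P(no events) = 1 - exp(-rate * time)
    return 1.0 - math.exp(-rate_per_year * window_years)


if __name__ == "__main__":
    # Hypothetical input: one large quake every 22 years on average.
    for window in (1, 10, 30):
        p = quake_probability(22.0, window)
        print(f"Chance of a large quake within {window} year(s): {p:.0%}")
```

A forecast of this kind says nothing about which year the quake will arrive. It only says how the odds accumulate as the window lengthens, which is the kind of information a planner weighing a retrofit or a building code can act on.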

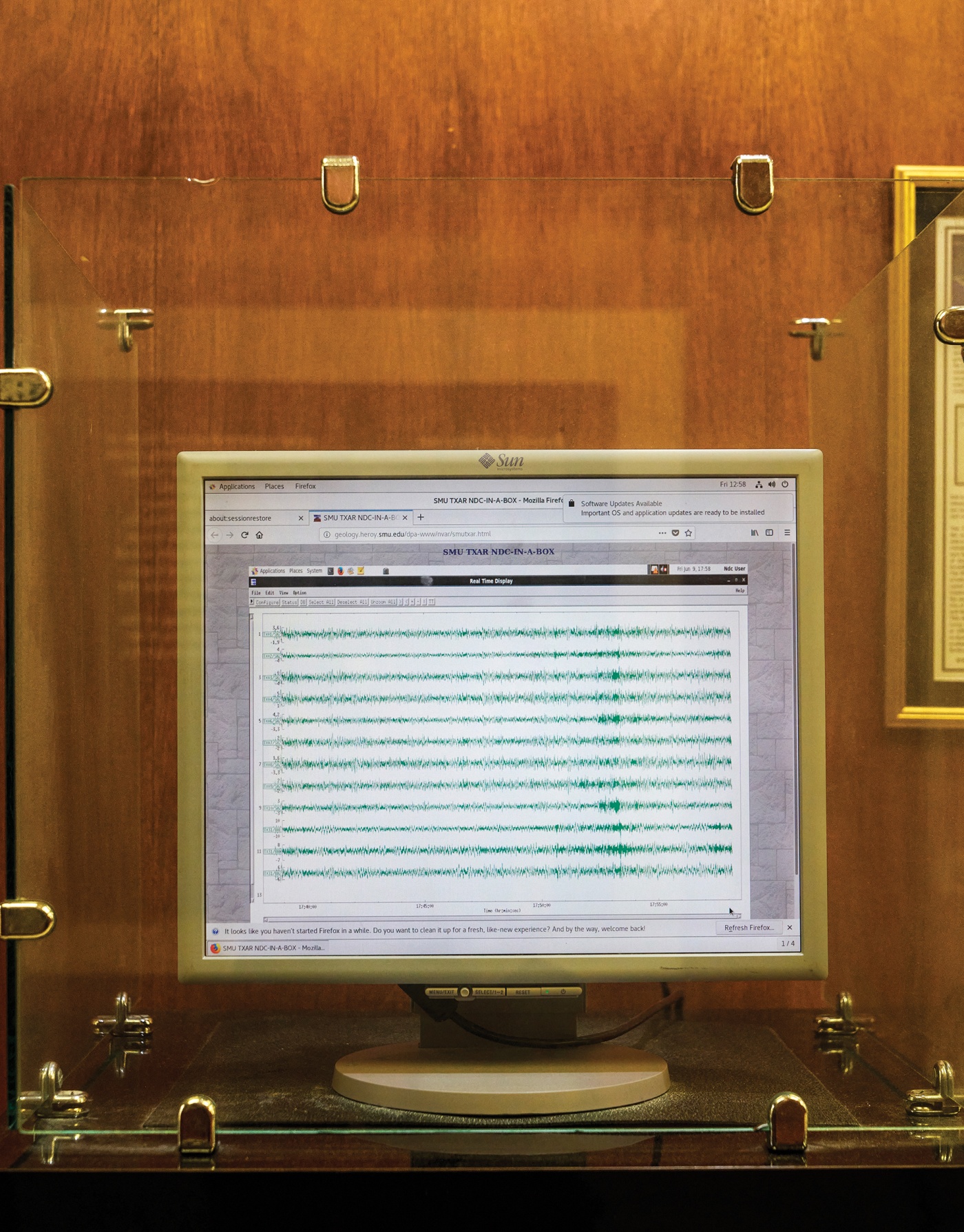

Today, Blanpied and his colleagues at the USGS Earthquake Hazards Program are developing a continuous aftershock forecasting system to help emergency officials better adapt to the aftershocks that follow a major earthquake. While aftershocks are a normal part of any earthquake, they create significant operational problems for emergency response personnel, who don’t know where those aftershocks will strike or how strong they will be. This complicates basic response matters such as picking an airport to coordinate rescues, planning evacuation routes, or deciding where to stage an ad hoc medical facility for victims.

“Over the next few years, I think we’ll be able to make those real-time forecasts and put out products that answer those particular questions,” Blanpied says. “If people start getting this kind of forecasting information on a regular basis, most of the time it will be kind of boring, like a weather report is now until there’s a storm bearing down. We don’t think much about it, but it helps us calibrate things like whether to carry an umbrella. So my hope is we can get this information about the likelihood of earthquakes and their impacts out to people in ways that speak to them and are useful.”

Daniel Oberhaus is a science writer in Brooklyn and author of The Silicon Shrink, a forthcoming book about AI and psychiatry.