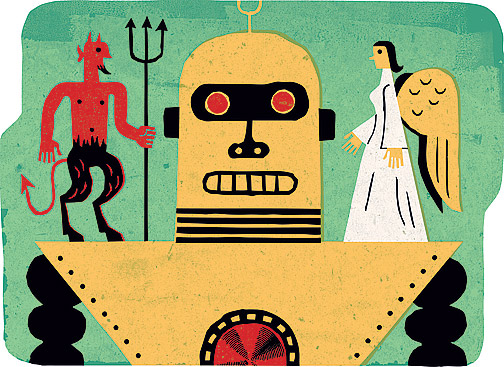

Psychology professor Bertram Malle researches “social cognition,” a blend of psychology and neuroscience that examines how people behave in social situations. He is part of a team of researchers attempting to train robots to make moral decisions, arguing that as robots take over more human tasks, they need at least some “moral competence.”

BAM In what situations are robots faced with moral decisions?

MALLE Even just partly autonomous robots will quickly get into situations that require moral considerations. For example, which faintly crying voice from the earthquake rubble should the rescue robot follow: the child’s or the older adult’s? What should a medical robot do when a cancer patient begs for more morphine but the supervisory doctor is not reachable to approve the request? Should a self-driving car prevent its owner from taking over manual driving when the owner is drunk but needs to get his child, who is having a seizure, to the hospital?

BAM How do you even go about a project like this?

MALLE We are beginning to formalize a cognitive theory of blame amenable to computational implementation. And recently we have started to take this cognitive theory of blame into the social domain, asking when, how, and for what purposes people express moral criticism: blaming your friend for stealing a shirt from the department store, for example.

BAM Human morality reduced to a single algorithm or formula?

MALLE It’s a grave mistake to think of human moral competence as one thing. It would be just as grave a mistake to build a single moral module in a robot. Things are beautifully complex when we deal with the human mind. We should expect no less of the robot mind.

BAM What would you say to people who might be a bit unnerved by the idea of moral robots?

MALLE Consider the alternative: a robot that takes care of your ailing mother, has no idea about basic norms of politeness, respect, and autonomy, and has no capacity to make a difficult decision, such as in my example of dispensing urgently needed pain medication even though the doctor in charge is not reachable. “Morality” has long been considered unique to humans. But if we build robots that interact with humans and that have increasing decision capacity, impact, and duties of care, there is no alternative to creating “moral” robots. Keeping robots amoral would simply be unethical.

Illustration by Timothy Cook.